The public cloud computing market has become a multi-billion-dollar business, and as it continues to grow, enterprises are relying on it more and more. According to a report by International Data Corporation (IDC), the cloud market is expected to grow at a compound annual rate of 21.9%, with spending on public cloud services reaching $277 billion by 2021.

Cloud computing migration involves identifying the right workloads to move to the public cloud. This decision process is not easy, since dozens of business-critical apps support the typical enterprise. Once IT managers see the agility and operational efficiencies available in the cloud, they may be tempted to migrate as many apps as possible. However, public clouds provide a particular economic model for computing that works well for some workloads but not all, so the trick is to choose the right ones and avoid any cloud computing migration issues.

So here is a list of three basic cloud workload types you can consider when migrating to a public cloud.

1. Scalable Web Applications

Many externally facing web applications have the potential to expand unpredictably as user traffic balloons. A sudden rise in traffic requires your computing infrastructure to scale rapidly in order to manage the extra workload. If your infrastructure cannot provision capacity fast enough, it runs headlong into a “success disaster”: you will not be able to monetize or capitalize on the high traffic volume because your infrastructure simply can’t keep up.

Ideally, the scalable infrastructure of a public cloud will help you avoid this kind of cloud computing migration issue. Web-based applications running on a Platform as a Service (PaaS) or Infrastructure as a Service (IaaS) can be provisioned automatically and respond to peaks without human intervention. Your public cloud provider gives your hosted application on-demand access to a large shared pool of resources, so as you experience spikes in traffic, you are able to consume a greater portion of that pool. And since most cloud computing service agreements do not require you to reserve up front all the infrastructure needed to cope with a peak load, you only pay for the resources that you actually use.
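To illustrate the scaling behavior described above, here is a minimal Python sketch of the kind of rule an autoscaler might apply. The function name, the per-instance capacity figure, and the minimum and maximum limits are all assumptions made for this illustration; they are not taken from any particular provider's API.

```python
import math


def desired_instances(requests_per_second: float,
                      capacity_per_instance: float,
                      min_instances: int = 2,
                      max_instances: int = 20) -> int:
    """Return how many instances a simple autoscaler would request.

    All parameters are hypothetical: capacity_per_instance is the number of
    requests per second one instance is assumed to handle comfortably.
    """
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    # Round up so that a partially loaded instance is still provisioned.
    needed = math.ceil(requests_per_second / capacity_per_instance)
    # Stay within the floor and ceiling agreed for the application.
    return max(min_instances, min(max_instances, needed))


if __name__ == "__main__":
    for rps in (150, 950, 5000):
        print(rps, "req/s ->", desired_instances(rps, 100), "instances")
```

In this sketch, quiet periods fall back to the minimum instance count, and a traffic spike is capped at the maximum. That cap is one reason it is safer to think of the provider's pool as very large rather than unlimited.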

2. Batch Processing

Tasks like encoding and decoding data streams are large batch jobs that demand a great deal of processing power. In batch processing, time is often of the essence; hence the aim is to assign all available resources to the task and then pass the results on to another part of the operation. Virtualized servers (using VMware or an equivalent middleware) can be dedicated to encoding the jobs at hand. Then, depending on the queue length, vSphere (or an equivalent management environment) can successively load additional Virtual Machines (VMs) in the cloud and automatically add servers, as needed, to meet workload demand.

A Practical Example:

If there are hundreds of jobs waiting in the queue and each server can process only one job at a time, the system can scale from one server to ten servers in the cloud to cut the total elapsed processing time. By running the queue of jobs in parallel, you get your results back much faster. Because the cloud is billed like a utility, renting ten servers for an hour each will usually cost the same as renting one server for ten hours, so reducing your overall processing time doesn’t cost you anything extra.
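The arithmetic behind this example can be sketched in a few lines of Python. The job count, job duration, and hourly rate below are hypothetical figures chosen for illustration, and the sketch assumes every job takes the same time and that billing is proportional to the time each server runs.

```python
import math


def batch_plan(num_jobs: int, minutes_per_job: float,
               servers: int, hourly_rate: float) -> tuple[float, float]:
    """Return (elapsed_hours, total_cost) for a queue of equal-length jobs.

    Assumes each server runs one job at a time and is billed for the
    whole elapsed time of the batch (hypothetical pricing model).
    """
    if servers < 1:
        raise ValueError("at least one server is required")
    # Each "wave" runs one job per server in parallel.
    waves = math.ceil(num_jobs / servers)
    elapsed_hours = waves * minutes_per_job / 60
    total_cost = servers * elapsed_hours * hourly_rate
    return elapsed_hours, total_cost


if __name__ == "__main__":
    # 200 jobs of 3 minutes each at an assumed $0.50 per server-hour.
    print(batch_plan(200, 3, servers=1, hourly_rate=0.50))   # (10.0, 5.0)
    print(batch_plan(200, 3, servers=10, hourly_rate=0.50))  # (1.0, 5.0)
```

Both runs consume ten server-hours, so the bill is the same while the elapsed time drops from ten hours to one. The costs diverge slightly when the jobs do not divide evenly across the servers or when the provider rounds billing up to a minimum increment.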

3. Disaster Recovery

In case of a failure in the hardware systems installed in your corporate data center, cloud security tools can include disaster recovery services that maintain the necessary resource configuration sets in a different data center at a different location. Instead of maintaining your own standby to back up your production-quality infrastructure, you simply invoke your disaster recovery plan and keep unexpected downtime to a minimum. By implementing a disaster recovery plan with a public cloud provider, you also gain the advantage of geographic diversity.

Practical Example:

On either the public cloud or your primary corporate data center, you can be in production with your entire infrastructure (servers, load balancers, databases, etc.). Then, at the disaster recovery site, you only need to maintain and pay for a replicated secondary database to minimize expenses, or multiple databases if your workload requires them. You will not be charged for your secondary computing infrastructure until your disaster recovery plan is invoked.

Similarly, cloud virtualization and automation tools will help you to execute a smoother failover when you are trying to get all the bits of your production infrastructure up and communicating with one another after a disaster event. Automation tools also make it easy for you to enhance and test your disaster recovery process regularly without a massive investment in hardware.
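To make the cost profile of the replicated-database example concrete, here is a rough Python sketch that compares it with keeping a full duplicate of production running at the recovery site. The hourly rates, the 720-hour month, and the function itself are assumptions for illustration only; real pricing varies by provider and configuration.

```python
def monthly_dr_cost(db_rate: float, compute_rate: float,
                    hours_in_month: int = 720, hours_invoked: int = 0,
                    full_standby: bool = False) -> float:
    """Estimate one month of disaster recovery site cost.

    db_rate and compute_rate are hypothetical hourly prices for the
    replicated database and for the rest of the infrastructure.
    """
    if not 0 <= hours_invoked <= hours_in_month:
        raise ValueError("hours_invoked must fit within the month")
    # The replicated secondary database runs, and is billed, all month.
    database_cost = db_rate * hours_in_month
    # Compute is billed all month only for a full standby; otherwise it is
    # billed just for the hours the recovery plan is actually invoked.
    compute_hours = hours_in_month if full_standby else hours_invoked
    return database_cost + compute_rate * compute_hours


if __name__ == "__main__":
    print(monthly_dr_cost(0.5, 4.0))                     # database only
    print(monthly_dr_cost(0.5, 4.0, hours_invoked=24))   # one-day failover
    print(monthly_dr_cost(0.5, 4.0, full_standby=True))  # full duplicate
```

With these assumed rates, a month without an incident costs only the database replica, and a one-day failover adds just 24 hours of compute charges.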

To implement the workloads mentioned above, there are two primary strategies you can consider when migrating business-critical applications to a Public Cloud.

- Lift and shift

Lifting and shifting is a strategic approach that you can use to migrate virtualized applications to the cloud. It moves an application and its associated data to a public cloud unchanged, which works because the application runs on the same middleware (e.g., VMware) in either setting. There is no need to re-write or redesign the application.

- Cloud-native development

The alternative to lifting and shifting is redesigning the apps to become cloud-native and this usually takes quite a long time to complete. It is an intricate process that requires an investment in splitting up a monolithic application and moving it to a more services-separated architecture.

How do cloud architects decide which of the two migration strategies mentioned above is more suited to a corporation’s business objectives? Typically, a SaaS vendor will bank on an improved customer experience to gain more subscribers. Such a vendor will make use of all the utilities and services available as part of the public cloud infrastructure. These can include software development and testing tools, data visualization tools and tight integration with mobile devices. However, the cloud-native development option requires a re-write of the application to make the best use of these innovations.

On the other hand, companies that have already virtualized an application and are simply looking for a hybrid computing architecture involving an external public cloud provider will usually use a facility such as VMware Cloud on AWS. It enables their virtualized application to be brought seamlessly to the Amazon Web Services (AWS) public cloud. The advantage of the “lift and shift” option is that the onboarding process is minimal. Managing the vSphere environment on AWS is very similar to the command and control methodologies they already know from their corporate data center. This option also enables a company to institute a hybrid cloud strategy to avoid any cloud computing migration issues. In this way, the same application can run on computing resources both on-premises and in the public cloud.

Once you have drawn up a list of potential workloads to migrate to a public cloud, your cloud computing migration plan needs to be further vetted by a cross-disciplinary team that includes representatives from the IT security team, compliance engineers and business unit stakeholders.

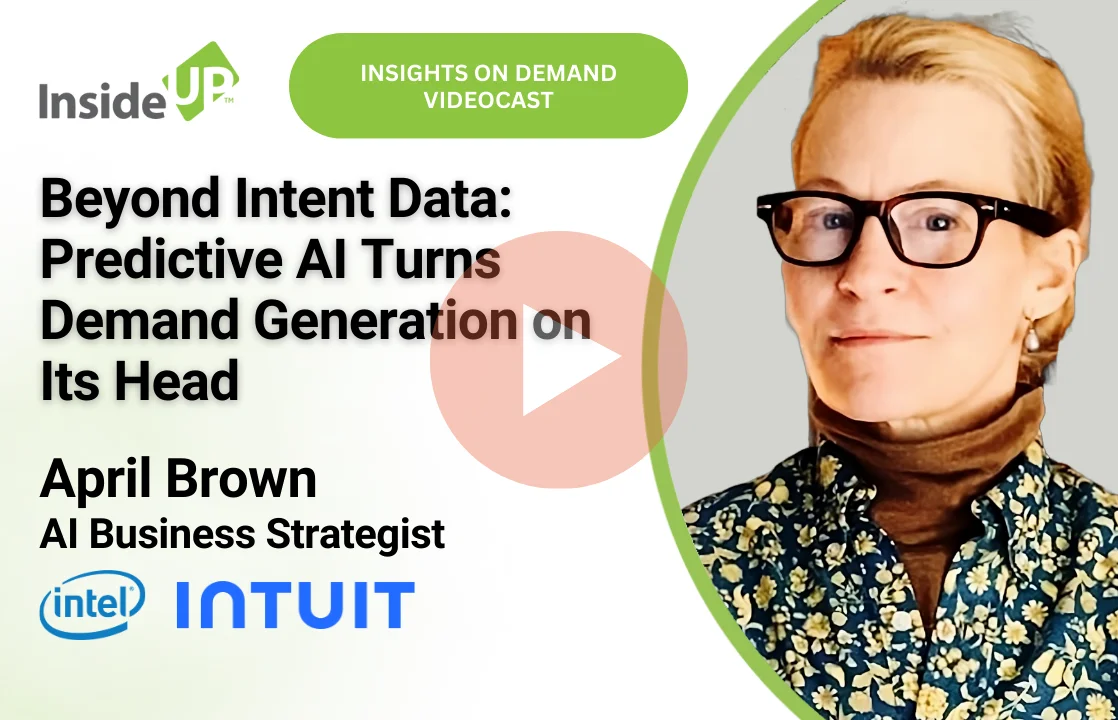

InsideUp, a leading demand generation agency, has over a decade of experience helping technology clients that target mid-market and enterprise businesses meet and exceed their key marketing campaign metrics. Our clients augment their in-house demand generation campaigns (including ABM) by partnering with us to build large sales pipelines. Please contact us to learn more.